Live decisions reward structure over instinct. A match can turn in minutes, and the worst errors arrive when attention is scattered, notes are messy, and emotions run the show. A simple framework protects judgment under pressure: define what information is trusted, organize how it is captured, and apply a short checklist before acting. The outcome is not certainty. It is clarity about what is known, what is assumed, and what must be ignored in the heat of play.

In-play work blends observation with discipline. Scoreboard shapes, rotation patterns, and match-ups reveal more than just raw pace or a single highlight clip. Good data hygiene ensures those signals are recorded consistently. A tight routine then turns the record into decisions that can survive randomness – and be audited later.

A One-Minute In-Play Checklist

A checklist keeps thinking short and sharp when the run rate climbs or the clock drops. These prompts fit inside a minute and prevent impulsive moves.

- Confirm the baseline. Note the current score, resources remaining, and the next three opportunities to accelerate.

- Identify the plan on both sides. Summarize field shapes or formations, and name the likely tactic for the next phase.

- Test the read. Look for one piece of counter-evidence. If it exists, slow down.

- Update risk limits. Recalculate exposure after recent swings. Nothing proceeds if a cap is hit.

- Decide and document. Record the action and the reason in one sentence. Future reviews rely on this line.

Checklists work because they standardize the moment before an action. Attention shifts from noise to what matters, making decisions comparable across matches.
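The five prompts can be sketched as a small gate in Python. This is a minimal illustration, not an existing tool: the names `ChecklistResult` and `run_checklist`, the parameters, and the numeric exposure values are all hypothetical. The only rules taken from the checklist are that counter-evidence slows the decision, a hit cap blocks it, and every action carries a one-sentence reason.

```python
from dataclasses import dataclass


@dataclass
class ChecklistResult:
    proceed: bool
    note: str  # one-sentence line kept for later review


def run_checklist(baseline: str, plan: str, counter_evidence: bool,
                  exposure: float, cap: float,
                  action: str, reason: str) -> ChecklistResult:
    """Walk the five prompts in order; return go/no-go plus a logged note."""
    # Prompts 1 and 2: baseline and both sides' plans must be written down.
    if not baseline.strip() or not plan.strip():
        return ChecklistResult(False, "hold: baseline or plan not recorded")
    # Prompt 3: one piece of counter-evidence is enough to slow down.
    if counter_evidence:
        return ChecklistResult(False, "hold: counter-evidence found")
    # Prompt 4: nothing proceeds if a cap is hit (units are an assumption).
    if exposure >= cap:
        return ChecklistResult(False, "hold: risk cap reached")
    # Prompt 5: an action without a recorded reason is not allowed through.
    if not reason.strip():
        return ChecklistResult(False, "hold: no reason recorded")
    return ChecklistResult(True, f"{action}: {reason.strip()}")
```

Returning a note on every path, including holds, means the log shows why nothing happened as well as why something did.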

Data Hygiene That Actually Holds Up

Data hygiene is not about volume. It is about repeatable inputs and clean labels. Start with a compact template – timestamp, state of play, key change, intended reaction. Notes should favor facts: field movement, rotation choice, pace change, or a clearly defined scoreboard window. Subjective impressions are allowed, yet they must be tagged as such. When two observers share a log, agreement rises when every entry answers the same three questions – what changed, why it likely changed, and what response it demands.
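One way to hold the template steady is a small record type, sketched below in Python. The field names are assumptions derived from the template and the three questions above; `LogEntry`, `make_entry`, and `to_json_line` are hypothetical names, and JSON lines are just one possible storage format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LogEntry:
    timestamp: str            # ISO 8601, UTC
    state_of_play: str        # score, resources remaining
    key_change: str           # what changed
    likely_cause: str         # why it likely changed
    intended_reaction: str    # what response it demands
    subjective: bool = False  # True when the entry is an impression, not a fact


def make_entry(state_of_play, key_change, likely_cause, intended_reaction,
               subjective=False, now=None):
    """Build an entry, rejecting blanks so every row answers the same questions."""
    fields = {
        "state_of_play": state_of_play,
        "key_change": key_change,
        "likely_cause": likely_cause,
        "intended_reaction": intended_reaction,
    }
    for name, value in fields.items():
        if not value.strip():
            raise ValueError(f"{name} must not be empty")
    now = now or datetime.now(timezone.utc)
    return LogEntry(now.isoformat(timespec="seconds"), state_of_play,
                    key_change, likely_cause, intended_reaction, subjective)


def to_json_line(entry: LogEntry) -> str:
    """Serialize one entry as a single JSON line for an append-only log."""
    return json.dumps(asdict(entry), sort_keys=True)
```

Because blank fields are rejected at entry time, two observers sharing the log cannot silently skip one of the three questions.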

Teams that track live decisions sometimes sanity-check their logs against how football and kabaddi prices typically react on trusted mobile platforms, using that view as context rather than a trigger.

Noise creeps in when multiple dashboards compete for attention. The solution is to determine upfront which two or three metrics drive decisions. Everything else becomes situational. A stable template also improves post-match analysis, making it clear whether a loss came from variance or from a poor read.

Reduce Friction, Reduce Errors

Friction destroys judgment. Every additional screen, tab, or chat thread pulls attention away from the field. A lean setup wins – one main display for the broadcast, one smaller screen for the log and scoreboard, and a single communications channel for the team. Visual clutter is trimmed by hiding non-essential panes and disabling notifications. Audio cues can help during chaotic sequences, but only if they are narrowly targeted to events that truly require intervention.

Timeboxing prevents drift. Decision windows should be explicit – at the start of a phase, during a break, or immediately after a significant event. Acting outside those windows invites overreaction. When a plan requires new information, a hold is better than a guess. The framework respects the difference between absence of data and negative data – no message is not the same as a bad message.
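The two rules in this paragraph, explicit windows and the gap between missing and negative data, can be made concrete with a short Python sketch. The enum members mirror the three windows named above; `Window`, `next_step`, and the returned labels are hypothetical, and a real signal would rarely be a single boolean.

```python
from enum import Enum
from typing import Optional


class Window(Enum):
    PHASE_START = "phase_start"
    BREAK = "break"
    AFTER_EVENT = "after_significant_event"


def next_step(window: Optional[Window], signal: Optional[bool]) -> str:
    """Map the current window and signal to one of four labels.

    signal is None when no data has arrived, False when the data is
    negative, and True when it supports the plan.
    """
    if window is None:
        return "wait"        # outside every explicit decision window
    if signal is None:
        return "hold"        # absence of data: hold rather than guess
    if signal is False:
        return "stand_down"  # negative data is real information
    return "act"
```

Keeping `None` and `False` as separate cases is the whole point: a missing message triggers a hold, while a bad message changes the plan.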

Finally, pre-built defaults protect against hurry. If a situation matches a documented pattern, the system calls for the default response. Deviations are allowed only with a clearly recorded reason. That constraint keeps judgment consistent across long tournaments or busy weekends.
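A defaults table with a guarded override might look like the following Python sketch. The pattern names and responses are placeholders, and `choose_response` is a hypothetical helper; the only rule taken from the text is that a deviation needs a recorded reason.

```python
def choose_response(pattern, defaults, override=None, reason=""):
    """Return (response, note) for a documented pattern.

    Situations that match no documented pattern raise KeyError; handling
    them is outside the scope of this sketch.
    """
    default = defaults[pattern]
    if override is None or override == default:
        return default, "default"
    if not reason.strip():
        raise ValueError("deviation from default requires a recorded reason")
    return override, f"deviation: {reason.strip()}"


# Example table; both the key and the response are illustrative only.
DEFAULTS = {"late_timeout": "hold until play resumes"}
```

Raising an error on an unexplained override makes the constraint mechanical, so it does not depend on anyone remembering it late in a long tournament.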

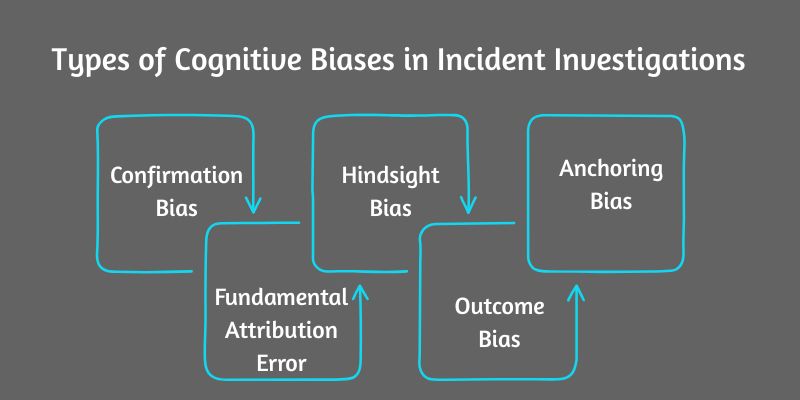

Cognitive Biases That Ambush Live Calls

Biases do not disappear under pressure. They get louder. A few patterns account for most in-play misreads.

Recency bias turns the last over or play into a story. The antidote is to anchor to the three-window view: a short past, the present, and a near future. If a single burst conflicts with the broader shape, patience is warranted. Confirmation bias filters out signals that contradict the pre-match plan. A forced entry to “prove the read was right” usually compounds the loss, so a formal search for disconfirming evidence belongs before any action. Availability bias exaggerates vivid events, such as a spectacular catch, a drop, or a missed sitter, while ignoring quieter control factors like rotation or field density. The checklist forces those quieter factors back into focus.

The sunk-cost trap is another classic. Exposure already spent is not a reason to add exposure. A good framework treats every decision as fresh, constrained by current risk limits. Overconfidence often appears after an early edge. Strict caps and pre-agreed cool-down periods protect against spirals, even when the read remains strong.

Decisions That Survive Randomness

A robust in-play framework accepts variance as a feature, not a bug. The goal is not to predict every twist. It is to ensure each action is justified by observable signals and recorded in a way that can be reviewed. That review loop then strengthens the next performance. Patterns that repeatedly fail are retired. Signals that repeatedly lead to accurate reads are promoted to primary status.

A small set of habits keeps the system honest. Keep a running tally of decisions that were correct for the stated reasons, regardless of outcome. Separate luck from process. Use blind reviews where the reviewer does not know the result when judging the quality of the decision. Iterate templates slowly. Stability reveals whether changes help or simply create more places for error.
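The tally and the blind review can be sketched together in Python. This is an illustration under assumptions: `Decision`, `blind_view`, and `tally` are hypothetical names, and process quality is reduced to a single flag that the reviewer sets after seeing only the recorded reason.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    reason: str                           # the one-sentence line from the log
    outcome_good: bool                    # how it turned out
    process_sound: Optional[bool] = None  # reviewer's verdict, None until reviewed


def blind_view(decision: Decision) -> dict:
    """What the reviewer sees: the stated reason, never the outcome."""
    return {"reason": decision.reason}


def tally(decisions) -> Counter:
    """Count reviewed decisions by (process, outcome) so luck and process separate."""
    counts = Counter()
    for d in decisions:
        if d.process_sound is None:
            continue  # not reviewed yet
        process = "sound" if d.process_sound else "unsound"
        outcome = "good" if d.outcome_good else "bad"
        counts[(process, outcome)] += 1
    return counts
```

In this layout, a growing `("sound", "bad")` count points to variance, while `("unsound", "good")` marks wins that came from luck rather than process.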

In-play work rewards calm structure. A minute of checklist discipline reduces rash moves. Clean logs make later analysis meaningful. Awareness of bias keeps attention on the field rather than the story in a viewer’s head. With those elements in place, decisions hold their shape when the match speeds up and the noise grows. The framework does not guarantee a perfect read. It guarantees a consistent one – the kind that holds up across a long season and builds trust in the next live call.